In modern AI applications, efficient communication between models and applications is crucial. Integration challenges often emerge when dealing with a mix of tools and technologies, especially when it comes to exchanging contextual information reliably and flexibly. The Model Context Protocol (MCP) [https://modelcontextprotocol.io] addresses this by defining a standardized, language-independent interface through which applications can deliver contextual information to AI models and receive their responses.

Originally developed by Anthropic [https://www.anthropic.com/news/model-context-protocol], MCP builds on concepts from the Language Server Protocol (LSP) and provides a similarly universal and flexible foundation for AI-based systems. MCP servers can be deployed in various ways: as an OCI container (from the Docker catalog [https://www.docker.com/products/mcp-catalog-and-toolkit/]), as a WebAssembly module (e.g. via mcp.run), or as a conventional application implemented in any programming language.

Spring AI [https://docs.spring.io/spring-ai/reference/api/mcp/mcp-overview.html], which recently integrated MCP, offers a convenient Java-based approach for consuming MCP servers directly within Spring Boot applications and implementing custom MCP servers.

Technical Foundations of MCP

The Model Context Protocol is designed to provide a standardized, well-defined interface for communication between applications and AI models. It follows a client-server architecture similar to the Language Server Protocol (LSP), which is already established as a standard in development environments. MCP defines a clear separation of responsibilities between client and server:

- MCP server: Processes incoming requests and returns contextual information or results. Typical responsibilities include providing data from databases, file systems, ticketing systems, or external AI models.

- MCP client: Sends requests to the MCP server and processes the responses. Examples include development environments, web applications, or other AI tools that rely on contextual information to perform tasks.

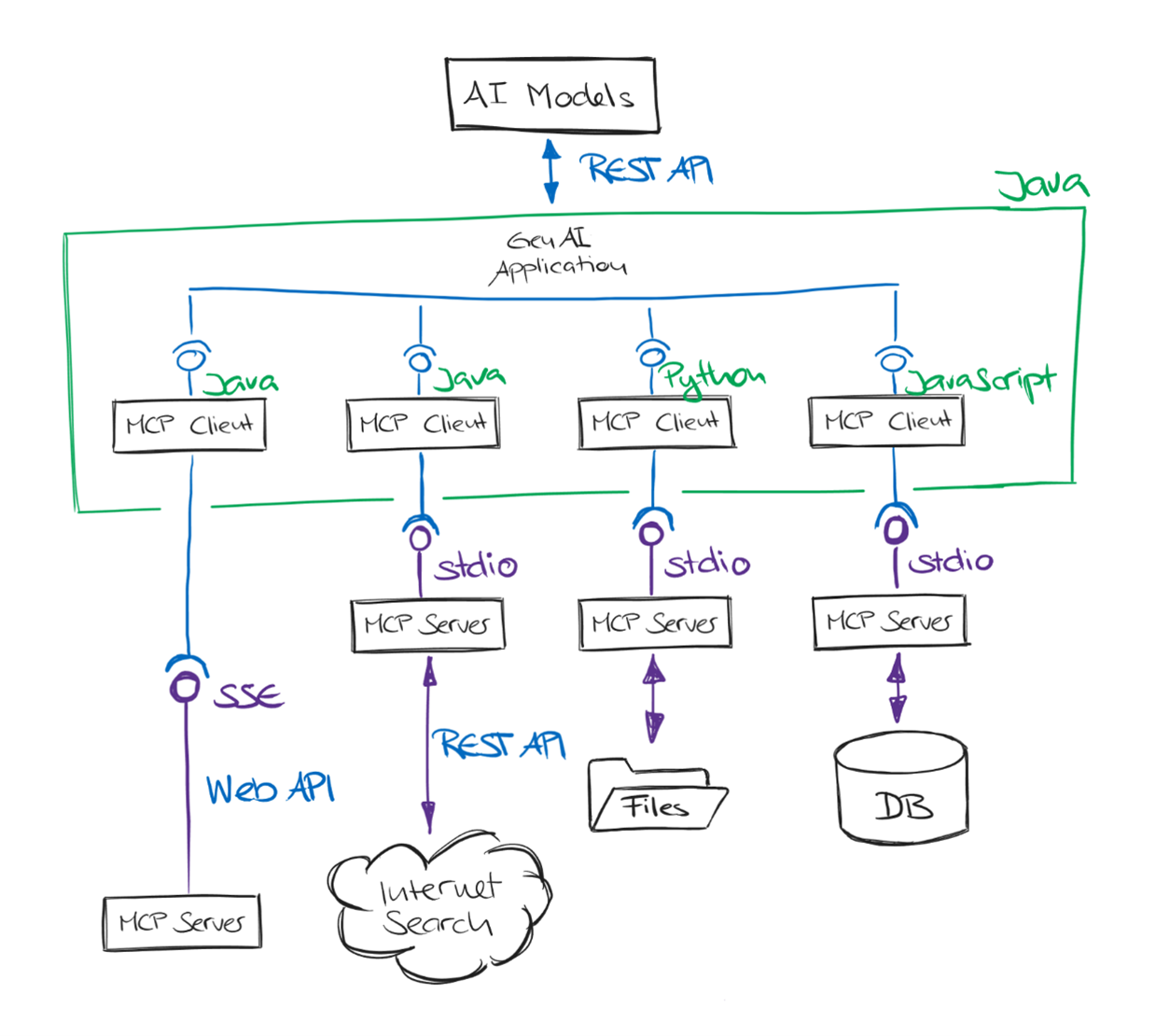

MCP supports multiple transport types for communication between client and server (Fig. 1). For local processes and command-line tools, the standard input/output (stdio) transport exchanges requests and responses over the process's standard input and output streams (stdin/stdout). For HTTP-based communication, the Server-Sent Events (SSE) transport lets MCP clients and servers connect over the network.
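To make the stdio transport more concrete, the following minimal sketch shows a loop that reads one message per line from an input stream and writes one reply per line to an output stream. The class StdioLoopSketch and its handler function are hypothetical and use only the JDK; a real MCP server would parse the JSON messages and rely on the MCP Java SDK rather than on hand-written string handling.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.PrintWriter;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.util.function.UnaryOperator;

// Hypothetical sketch of a newline-delimited message loop, as used by stdio-style transports.
class StdioLoopSketch {

    // Reads one message per line, passes it to the handler, and writes one reply per line.
    static void serve(Reader in, Writer out, UnaryOperator<String> handler) {
        BufferedReader reader = new BufferedReader(in);
        PrintWriter writer = new PrintWriter(out);
        try {
            String line;
            while ((line = reader.readLine()) != null) {
                if (line.isBlank()) {
                    continue; // skip empty lines between messages
                }
                writer.print(handler.apply(line));
                writer.print('\n'); // one reply per line, independent of the platform separator
                writer.flush();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In a real process, the loop would be wired to System.in and System.out; passing a StringReader and a StringWriter instead makes it easy to try the loop without starting a separate process.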

MCP uses a standardized message format based on JSON-RPC 2.0. This simplifies the development of new MCP clients and servers while ensuring broad compatibility across different systems.
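As an illustration of the message format, the following sketch builds the JSON-RPC envelope for a tool invocation using the MCP method tools/call. The class JsonRpcSketch is hypothetical and assembles the JSON by hand only to keep the example free of dependencies; in practice, the MCP Java SDK creates these messages.

```java
// Hypothetical sketch: builds the JSON-RPC 2.0 envelope of an MCP "tools/call" request.
class JsonRpcSketch {

    // Wraps a value in double quotes and escapes characters that may not appear raw in JSON strings.
    static String quote(String value) {
        StringBuilder sb = new StringBuilder("\"");
        for (int i = 0; i < value.length(); i++) {
            char c = value.charAt(i);
            if (c == '"') sb.append("\\\"");
            else if (c == '\\') sb.append("\\\\");
            else if (c == '\n') sb.append("\\n");
            else if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
            else sb.append(c);
        }
        return sb.append('"').toString();
    }

    // argumentsJson must already be a valid JSON object, e.g. {"station":"Kempten"}.
    static String toolsCall(long id, String toolName, String argumentsJson) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"tools/call\""
                + ",\"params\":{\"name\":" + quote(toolName)
                + ",\"arguments\":" + argumentsJson + "}}";
    }

    public static void main(String[] args) {
        // The tool name and argument are illustrative values.
        System.out.println(toolsCall(1, "getStationIds", "{\"station\":\"Kempten\"}"));
    }
}
```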

Figure 1: MCP client and server architecture

Flexible Deployment of MCP Servers

A key advantage of the Model Context Protocol is its flexibility regarding MCP server deployment. Developers can seamlessly integrate MCP into existing system landscapes, using precisely the technologies that fit their requirements. Existing software systems can also be exposed to AI applications via an MCP server.

MCP servers can be used both locally as command-line applications and remotely via HTTP. Thanks to the standardized interfaces, they integrate into development environments such as Visual Studio Code or IntelliJ IDEA, as well as into application frameworks such as Spring Boot with Spring AI. Depending on the situation, MCP servers can act as content retrievers that supply AI models with the required context data.

Integrating MCP in Spring AI

The MCP Java SDK [https://modelcontextprotocol.io/sdk/java/mcp-overview] provides a full implementation of the Model Context Protocol for Java. It enables standardized communication between AI models and external tools, supporting both synchronous and asynchronous communication patterns. The SDK allows developers to create their own MCP clients and servers.

Spring AI MCP extends the MCP Java SDK by tightly integrating with the Spring ecosystem. Using the provided starters for Spring Boot, both MCP clients and servers can be developed easily and efficiently. This simplifies the integration of existing tools with AI models and the provision of custom context sources within AI applications.

The Spring Initializr makes it easy to set up projects with the necessary Spring AI MCP support. Developers benefit from familiar Spring Boot features like auto-configuration and simple management of connections and services.

Integrating a Node-based MCP Server in Spring AI

Spring AI also supports integrating external MCP servers implemented in other languages, such as Node.js. In this example, an MCP server for weather queries (@h1deya/mcp-server-weather) is integrated into a Spring Boot application via stdio and accessed through a chat endpoint.

Two dependencies are required for the application to communicate with MCP servers and to interact with OpenAI models (Listing 1):

- spring-ai-starter-mcp-client: Enables communication with MCP servers through the MCP Java SDK.

- spring-ai-starter-model-openai: Integrates OpenAI models, such as GPT-4o-mini, into the Spring AI infrastructure.

Listing 1: Maven dependencies for Spring AI and the MCP client

<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-mcp-client</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-model-openai</artifactId>
</dependency>

The application.yml file (Listing 2) configures the connection to a local Node-based MCP server, which is started automatically using npx. The configuration options command: npx and args: -y,@h1deya/mcp-server-weather [https://github.com/hideya/mcp-server-weather-js] launch the MCP server from the Java process as a Node.js module.

This configuration ensures that the MCP server starts together with the application and is reachable via stdio. Alternatively, the MCP client can be configured and used programmatically through the corresponding Java API.

Listing 2: application.yml to configure the McpSyncClient

spring:
  application:
    name: mcp-client-weather
  ai:
    openai:
      api-key: ${OPENAI_API_KEY}
      chat:
        options:
          model: gpt-4o-mini
    mcp:
      client:
        stdio:
          connections:
            weather:
              command: npx
              args: -y,@h1deya/mcp-server-weather
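The following sketch illustrates what the command and args entries amount to: a command line that is handed to a child process whose stdin and stdout then carry the MCP messages. The class StdioCommandSketch is hypothetical and does not reproduce Spring AI's internal implementation; it only splits the comma-separated arguments and prepares a ProcessBuilder without starting it.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: turns the configured command and comma-separated args into a command line.
class StdioCommandSketch {

    static List<String> commandLine(String command, String commaSeparatedArgs) {
        List<String> parts = new ArrayList<>();
        parts.add(command);
        for (String arg : commaSeparatedArgs.split(",")) {
            if (!arg.isBlank()) {
                parts.add(arg.trim());
            }
        }
        return parts;
    }

    public static void main(String[] args) {
        List<String> cmd = commandLine("npx", "-y,@h1deya/mcp-server-weather");
        // The process is prepared but deliberately not started in this sketch.
        ProcessBuilder builder = new ProcessBuilder(cmd);
        System.out.println(builder.command());
    }
}
```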

The WeatherController (Listing 3) implements a REST endpoint that accepts user requests and processes them using Spring AI:

- The constructor sets up a ChatClient that is, by default, specialized in weather-related questions.

- The external MCP server is integrated as a tool (MCP client) via defaultTools.

- The /ask endpoint receives a question and returns an AI-generated answer, which is supplemented by the MCP server if necessary.

This setup combines OpenAI’s general-purpose language model with the domain-specific intelligence of an MCP-backed weather service and can be flexibly extended with additional tools.

Listing 3: Integrating the McpSyncClient with the OpenAI ChatClient

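import java.util.List;

import io.modelcontextprotocol.client.McpSyncClient;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.mcp.SyncMcpToolCallbackProvider;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RestController;

// Question and Answer are the request and response types, defined elsewhere in the project (not shown).
@RestController
public class WeatherController {

    private final ChatClient chatClient;

    // Spring injects the ChatClient builder and all MCP clients configured in application.yml.
    public WeatherController(
            ChatClient.Builder chatClientBuilder,
            List<McpSyncClient> mcpSyncClients) {
        this.chatClient = chatClientBuilder
                .defaultSystem("You are a weather assistant ...")
                // Expose the tools of the connected MCP servers to the model.
                .defaultTools(new SyncMcpToolCallbackProvider(mcpSyncClients))
                .build();
    }

    @PostMapping("/ask")
    public Answer ask(@RequestBody Question question) {
        return chatClient.prompt()
                .user(question.question())
                .call()
                // Map the model's JSON output onto the Answer type.
                .entity(Answer.class);
    }
}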
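A client could call the /ask endpoint roughly as sketched below, using the JDK's built-in HTTP client API. The base URL http://localhost:8080 and the JSON field name question are assumptions (the field name is inferred from the question.question() accessor used in the controller); the class AskRequestSketch is hypothetical and only builds the request.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical sketch: builds (but does not send) a POST request for the /ask endpoint.
class AskRequestSketch {

    static String questionJson(String question) {
        // Minimal escaping for backslashes and double quotes; enough for plain-text questions.
        String escaped = question.replace("\\", "\\\\").replace("\"", "\\\"");
        return "{\"question\":\"" + escaped + "\"}";
    }

    static HttpRequest askRequest(String baseUrl, String question) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/ask"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(questionJson(question)))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = askRequest("http://localhost:8080",
                "What will the weather be like tomorrow in Kempten?");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

With the application running, the request could be sent via HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()).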

Building a Custom MCP Server with Spring Boot

In addition to consuming external MCP servers, Spring AI also supports developing custom MCP servers based on Spring Boot. This example demonstrates building a simple weather service that provides weather data through the German Weather Service (DWD) API. The following Maven dependency is required to develop an MCP server:

<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-mcp-server-webmvc</artifactId>
</dependency>

It provides the necessary components to receive and process requests according to the Model Context Protocol over HTTP using Server-Sent Events (SSE).

The MCP server is configured via application.yml (Listing 4), where name and version define the server metadata transmitted during MCP communication.

Listing 4: application.yml to configure the MCP server

spring:
  ai:
    mcp:
      server:
        name: dwd-weather-server
        version: 0.0.1

The following configuration class (Listing 5) registers the offered tools:

- The weatherTools method registers the WeatherService as a collection of tools accessible via MCP.

- Additionally, toUpperCase defines a simple tool that converts text to uppercase – an example of generic functionality.

Listing 5: Registering Tools

@Bean
ToolCallbackProvider weatherTools(WeatherService weatherService) {
    return MethodToolCallbackProvider.builder()
            .toolObjects(weatherService)
            .build();
}

@Bean
ToolCallback toUpperCase() {
    return FunctionToolCallback.builder("toUpperCase", (TextInput input) -> input.input().toUpperCase())
            .inputType(TextInput.class)
            .description("Put the text to upper case.")
            .build();
}

The actual logic is provided in the WeatherService (Listing 6). Both methods are annotated with the @Tool annotation, which automatically makes them available for communication through MCP. Input parameters are described using @ToolParam, enabling a more precise semantic description for AI models.

Listing 6: Logic is provided in the WeatherService

@Service
public class WeatherService {

    @Tool(description = "Find and retrieve the station_id for weather stations")
    public String getStationIds(@ToolParam(
            description = "Station is typically just city") String station) {
        // business logic
        return stationId;
    }

    @Tool(description = "Retrieve current and forecast weather information.")
    public String getStationOverview(@ToolParam(
            description = "List of station ids to resolve weather forecast.")
            List<String> stationIds) {
        // business logic
        return stationOverview;
    }
}
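The idea behind annotation-based tool registration can be sketched with plain reflection: a framework scans an object for annotated methods and collects their names and descriptions. The annotation SketchTool, the class DemoWeatherService, and the placeholder return values below are hypothetical stand-ins for illustration; they are not Spring AI's @Tool or its actual discovery mechanism.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of annotation-driven tool discovery using only the JDK.
class ToolDiscoverySketch {

    // Stand-in for a tool annotation; it only carries a description.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface SketchTool {
        String description();
    }

    static class DemoWeatherService {
        @SketchTool(description = "Find and retrieve the station_id for weather stations")
        public String getStationIds(String station) {
            return "station-id-for-" + station; // placeholder result
        }

        @SketchTool(description = "Retrieve current and forecast weather information.")
        public String getStationOverview(String stationId) {
            return "overview-for-" + stationId; // placeholder result
        }

        public String notATool() {
            return "ignored"; // no annotation, so it is not discovered
        }
    }

    // Collects tool name -> description for all annotated methods, sorted by name.
    static Map<String, String> discover(Class<?> type) {
        Map<String, String> tools = new TreeMap<>();
        for (Method method : type.getDeclaredMethods()) {
            SketchTool tool = method.getAnnotation(SketchTool.class);
            if (tool != null) {
                tools.put(method.getName(), tool.description());
            }
        }
        return tools;
    }

    public static void main(String[] args) {
        discover(DemoWeatherService.class)
                .forEach((name, description) -> System.out.println(name + ": " + description));
    }
}
```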

By default, the resulting MCP server exposes the registered tools via SSE on HTTP port 8080 (Spring Boot's default port). Incoming requests are handled according to MCP, with requests and responses exchanged as JSON-RPC messages.

Any MCP client that supports SSE can now consume these tools. Because SSE itself only streams from server to client, the transport pairs the event stream with HTTP POST requests for client-to-server messages; together they give AI models and other applications a bidirectional channel, regardless of their implementation language or environment.
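On the wire, an SSE stream is plain text: each event consists of field lines such as event: and data:, terminated by a blank line. The following sketch parses such a stream with the JDK only. The class SseParseSketch is hypothetical, and the sample payload (including the path /mcp/message and the session id) is an illustrative assumption rather than captured output.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: parses "event:" and "data:" fields from a text/event-stream body.
class SseParseSketch {

    record SseEvent(String event, String data) {}

    static List<SseEvent> parse(String stream) {
        List<SseEvent> events = new ArrayList<>();
        String event = "message";            // default event type
        StringBuilder data = new StringBuilder();
        boolean hasData = false;
        for (String line : stream.replace("\r\n", "\n").split("\n", -1)) {
            if (line.isEmpty()) {            // a blank line dispatches the pending event
                if (hasData) {
                    events.add(new SseEvent(event, data.toString()));
                }
                event = "message";
                data.setLength(0);
                hasData = false;
                continue;
            }
            if (line.startsWith(":")) {
                continue;                    // comment line, often used as keep-alive
            }
            int colon = line.indexOf(':');
            String field = colon < 0 ? line : line.substring(0, colon);
            String value = colon < 0 ? "" : line.substring(colon + 1);
            if (value.startsWith(" ")) {
                value = value.substring(1);  // a single leading space is not part of the value
            }
            if (field.equals("event")) {
                event = value;
            } else if (field.equals("data")) {
                if (hasData) {
                    data.append('\n');       // multiple data lines are joined with newlines
                }
                data.append(value);
                hasData = true;
            }
        }
        return events;
    }

    public static void main(String[] args) {
        String sample = "event: endpoint\ndata: /mcp/message?sessionId=abc\n\n"
                + "event: message\ndata: {\"jsonrpc\":\"2.0\",\"id\":1,\"result\":{}}\n\n";
        parse(sample).forEach(System.out::println);
    }
}
```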

Prompt Enrichment

By providing specialized tools through a dedicated MCP server, the Model Context Protocol can be specifically used to enrich prompts. In many AI applications, passing only the actual user query to the model is not sufficient. The quality and relevance of responses improve significantly when additional contextual information is provided. This technique is known as “prompt stuffing” or “prompt enrichment”. This is especially important for real-time or private data. Tools integrated as MCP servers can deliver information from external systems to support the model in generating responses.

As demonstrated in the previous section, Spring AI and Spring Boot simplify the configuration of both clients and servers. A typical example is the weather service introduced earlier. Here, the language model is enriched with current weather data before generating a response. Spring AI uses the concept of tools within the chat client for this. Tools can be either locally defined services or external MCP servers.

When a prompt is sent, the ChatClient passes the definitions of the registered tools to the AI model along with the user input. If the model requests a tool call, Spring AI invokes the tool and returns the result to the model, which then generates its answer from the enriched context.

Procedure Example

A typical exchange proceeds as follows:

- User question: “What will the weather be like tomorrow in Kempten?”

- LLM: The model selects the MCP tools needed to retrieve the data.

- Tool call: The MCP server provides current weather data for Kempten.

- Enrichment: The weather data is added to the conversation alongside the original question.

- Answer generation: The AI model generates an answer based on the enriched prompt. If more data is needed, the process loops back to the model's tool selection.
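The loop described by these steps can be sketched in a few lines of plain Java. Everything in the example is hypothetical: the Model interface, the scripted model that requests two tools in sequence, and the demo tools with their canned results merely simulate the interplay between model and tools; they are not Spring AI's implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical simulation of a tool-calling loop between a model and its tools.
class ToolLoopSketch {

    // A model turn is either a tool request (toolName != null) or a final answer.
    record ModelTurn(String toolName, String argument, String answer) {
        static ModelTurn callTool(String toolName, String argument) {
            return new ModelTurn(toolName, argument, null);
        }
        static ModelTurn finalAnswer(String answer) {
            return new ModelTurn(null, null, answer);
        }
        boolean isToolCall() {
            return toolName != null;
        }
    }

    interface Model {
        ModelTurn next(List<String> conversation);
    }

    static String run(String question, Model model,
                      Map<String, Function<String, String>> tools, int maxSteps) {
        List<String> conversation = new ArrayList<>();
        conversation.add("USER: " + question);
        for (int step = 0; step < maxSteps; step++) {
            ModelTurn turn = model.next(conversation);
            if (!turn.isToolCall()) {
                return turn.answer();                      // answer generation
            }
            Function<String, String> tool = tools.get(turn.toolName());
            String result = tool == null
                    ? "unknown tool: " + turn.toolName()
                    : tool.apply(turn.argument());         // tool call
            conversation.add("TOOL " + turn.toolName() + ": " + result); // enrichment
        }
        throw new IllegalStateException("No final answer after " + maxSteps + " steps");
    }

    // Scripted stand-in for an LLM: asks for the station id, then the overview, then answers.
    static Model scriptedModel() {
        return conversation -> {
            int toolResults = conversation.size() - 1;
            if (toolResults == 0) return ModelTurn.callTool("getStationIds", "Kempten");
            if (toolResults == 1) return ModelTurn.callTool("getStationOverview", "02559");
            return ModelTurn.finalAnswer("Answer based on: " + conversation.get(2));
        };
    }

    // Demo tools with canned results instead of real weather data.
    static Map<String, Function<String, String>> demoTools() {
        return Map.of(
                "getStationIds", city -> "StationId: 02559",
                "getStationOverview", id -> "forecast for " + id);
    }

    public static void main(String[] args) {
        System.out.println(run("What will the weather be like tomorrow in Kempten?",
                scriptedModel(), demoTools(), 5));
    }
}
```

The maxSteps limit is a design choice of this sketch: it guards against a model that keeps requesting tools without ever producing an answer.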

Spring AI provides insights into this process through its logging functionality. The logs reveal how the original prompt was enriched with the results from the tools (Listing 7).

Listing 7: Example log for prompt enrichment

2025-05-12T10:47:05.303+02:00 DEBUG 3175 --- [mcp-client-weather-sse] [nio-8081-exec-2] o.s.web.client.DefaultRestClient : Writing [ChatCompletionRequest[messages=[ChatCompletionMessage[rawContent=

What is the weather forecast for Kempten for tomorrow?, role=USER, name=null, toolCallId=null, toolCalls=null, refusal=null, audioOutput=null], ChatCompletionMessage[rawContent=

You are an assistant for the analysis of weather data. You receive structured forecast data from various weather stations.

Data units:

- Temperature in 0,1 °C

- Start time Unix time (ms)

- Time interval (ms)

- Total precipitation in 0,1 mm/h

- Precipitation per day in 0,1 mm/d

- Sunshine in 0,1 min

- Humidity in 0,1 %

- Dew point in 0,1 °C (2 m height)

- Air pressure in 0,1 hPa (ground level)

Instructions:

- Convert all values into readable units.

- Identify and describe trends.

- Comment on missing values (null).

- Summarize temperature, precipitation, sunshine, humidity, dew point, wind (if present), and air pressure separately.

- Finally, provide a brief weather summary covering the weather conditions, temperature development, comfort level, and any notable events).

Formulate your answers clearly, step by step, and in complete sentences. Maintain a friendly, precise, and informative tone.

, role=SYSTEM, name=null, toolCallId=null, toolCalls=null, refusal=null, audioOutput=null], ChatCompletionMessage[rawContent= What is the weather forecast for Kempten for tomorrow?

Your response should be in JSON format.

Do not include any explanations, only provide a RFC8259 compliant JSON response following this format without deviation.

Do not include markdown code blocks in your response.

Remove the ```json markdown from the output.

Here is the JSON Schema instance your output must adhere to:

```{

"$schema" : "https://json-schema.org/draft/2020-12/schema",

"type" : "object",

"properties" : {

"answer" : {

"type" : "string"

}

},

"additionalProperties" : false

}```

, role=USER, name=null, toolCallId=null, toolCalls=null, refusal=null, audioOutput=null], ChatCompletionMessage[rawContent=null, role=ASSISTANT, name=null, toolCallId=null, toolCalls=[ToolCall[index=null, id=call_4ggmsbY1Kg3WwopK6JEtlldc, type=function, function=ChatCompletionFunction[name=spring_ai_mcp_client_weather_getStationIds, arguments={"station":"Kempten"}]]], refusal=null, audioOutput=null], ChatCompletionMessage[rawContent=[{"text":"\"StationId: 02559, Stationsname: Kempten, Bundesland: Bayern\\n\""}], role=TOOL, name=spring_ai_mcp_client_weather_getStationIds, toolCallId=call_4ggmsbY1Kg3WwopK6JEtlldc, toolCalls=null, refusal=null, audioOutput=null], ChatCompletionMessage[rawContent=null, role=ASSISTANT, name=null, toolCallId=null, toolCalls=[ToolCall[index=null, id=call_3Q3csYoAzo2qPsHKFOMbFQ1L, type=function, function=ChatCompletionFunction[name=spring_ai_mcp_client_weather_getStationOverview, arguments={"stationIds":["02559"]}]]], refusal=null, audioOutput=null], ChatCompletionMessage[rawContent=[{"text":"\"StationOverview{stations={02559=StationData[forecast1=Forecast[stationId=02559, start=1747000800000, timeStep=3600000, temperature=[…, 115, 123, 133, …], windSpeed=null, windDirection=null, windGust=null, precipitationTotal=[32767, 32767, …], sunshine=[32767, 32767, 32767, 32767,…], dewPoint2m=[46, 41, 39,…], surfacePressure=[10216, 10218, 10217, …], isDay=[false, false, …], cloudCoverTotal=[], temperatureStd=[…, 12, 14, 14, 13, 12,…], icon=[32767, 32767,…], icon1h=[4, 4, …], precipitationProbablity=null, precipitationProbablityIndex=null], forecast2=Forecast[stationId=02559, start=1747260000000, timeStep=10800000, temperature=[], windSpeed=null, windDirection=null, windGust=null, precipitationTotal=[0, 0, …], sunshine=[32767, 32767, …], surfacePressure=[10145, 10145, …], isDay=[false, false,…], cloudCoverTotal=[], temperatureStd=[], icon=[…], precipitationProbablity=null, precipitationProbablityIndex=null], forecastStart=null, warnings=[], 
threeHourSummaries=null]}}\""}], role=TOOL, name=spring_ai_mcp_client_weather_getStationOverview, toolCallId=call_3Q3csYoAzo2qPsHKFOMbFQ1L, toolCalls=null, refusal=null, audioOutput=null]], model=gpt-4o-mini, store=null, metadata=null, frequencyPenalty=null, logitBias=null, logprobs=null, topLogprobs=null, maxTokens=null, maxCompletionTokens=null, n=null, outputModalities=null, audioParameters=null, presencePenalty=null, responseFormat=null, seed=null, serviceTier=null, stop=null, stream=false, streamOptions=null, temperature=0.7, topP=null, tools=[org.springframework.ai.openai.api.OpenAiApi$FunctionTool@372faade, org.springframework.ai.openai.api.OpenAiApi$FunctionTool@5c334418, org.springframework.ai.openai.api.OpenAiApi$FunctionTool@23b4be7c], toolChoice=null, parallelToolCalls=null, user=null, reasoningEffort=null]] as "application/json" with org.springframework.http.converter.json.MappingJackson2HttpMessageConverter

This example demonstrates that sometimes the context needs to be defined in the prompt for the LLM to correctly interpret the data. Creating such prompts is not always straightforward. An iterative approach and prompt validation with the LLM significantly aid this process.
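The unit hints in the system prompt correspond to simple conversions that could also be done in code before the data reaches the model. The sketch below converts values given in tenths of a unit and computes the start of a forecast slot from the start time and time step. The class ForecastUnitsSketch is hypothetical, and treating 32767 as a marker for missing values is an assumption based on how the value appears in the log, not something the data source is confirmed to define.

```java
import java.time.Instant;

// Hypothetical sketch: converts raw forecast values into readable units.
class ForecastUnitsSketch {

    // Assumption: 32767 marks a missing value in the raw data.
    static final int MISSING = 32767;

    // Converts a value given in tenths (e.g. 0.1 °C or 0.1 hPa) into the full unit.
    static Double tenthsToUnit(Integer raw) {
        if (raw == null || raw == MISSING) {
            return null;
        }
        return raw / 10.0;
    }

    // Start of the forecast slot at the given index, from start time and step in milliseconds.
    static Instant slotStart(long startMillis, long stepMillis, int index) {
        return Instant.ofEpochMilli(startMillis + stepMillis * index);
    }

    public static void main(String[] args) {
        System.out.println(tenthsToUnit(115) + " °C");       // temperature sample from the log
        System.out.println(tenthsToUnit(10216) + " hPa");    // surface pressure sample from the log
        System.out.println(slotStart(1747000800000L, 3600000L, 0));
    }
}
```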

Conclusion and Outlook

The Model Context Protocol provides a structured, language-independent, and extensible approach for communication between AI models and external tools. Thanks to the clear separation between client and server, as well as support for standardized transport mechanisms such as stdio and SSE, MCP can be applied in a wide range of scenarios, from local development environments to cloud-based applications.

The MCP Java SDK and the Spring AI integration offer developers a powerful, standardized toolset for consuming existing MCP servers and providing their own services. Seamless integration with Spring Boot enables rapid implementation without giving up proven Spring concepts.

The weather service example demonstrates how easily domain-specific functionality can be implemented as an MCP server using clear interfaces: annotations for tool descriptions and automatic exposure via HTTP. All code examples shown in the article, along with additional ones, are available in full on GitHub [https://github.com/patbaumgartner/spring-ai-mcp-entwickler.de].

As AI-powered applications become more widespread, MCP is likely to establish itself as a standardized interface between models and tools. Looking ahead, the protocol holds strong potential for further integrations such as in IDEs, DevOps tools, or specialized business applications, both locally and in distributed system landscapes.

FAQ

What is the Model Context Protocol (MCP)?

The Model Context Protocol is a standardized, language-agnostic way to connect applications and AI models, letting tools and services supply contextual data and receive results in a consistent format.

Why use MCP with Spring AI?

Spring AI integrates MCP into the Spring ecosystem, so Java/Spring Boot apps can both consume external MCP servers and expose custom tools as MCP servers using familiar Boot auto-configuration and starters.

Which transport types does MCP support?

MCP supports stdio for local process communication and Server-Sent Events (SSE) over HTTP for networked communication.

How do I integrate a Node-based MCP server in a Spring Boot app?

Add the MCP client and model dependencies, then configure the client to launch the Node server (e.g., npx -y @h1deya/mcp-server-weather) via application.yml. Spring AI wires the client so your controller can call tools exposed by that server.

What Maven dependencies do I need on the client side?

Use spring-ai-starter-mcp-client to talk to MCP servers and a model starter like spring-ai-starter-model-openai for LLM access.

How do I build a custom MCP server with Spring Boot?

Add spring-ai-starter-mcp-server-webmvc to enable an SSE-based MCP server in your Boot app. Define tools and expose them via the provided auto-configurations.

How are tools registered and exposed?

Register tool providers (e.g., a WeatherService) with a ToolCallbackProvider, and annotate methods with @Tool (and @ToolParam for inputs). They’re then discoverable and callable via MCP.

What is the default server behavior once enabled?

The server exposes its MCP endpoints over HTTP using SSE (default Boot port 8080 unless configured otherwise), ready for any compatible MCP client.

How does MCP help with prompt enrichment?

When the model requests a tool call, Spring AI invokes the matching MCP tool (e.g., a weather service) and feeds the result back to the model, improving accuracy and relevance. You can observe this in application logs.

Can I mix multiple tools and servers?

Yes. You can register multiple tools and connect to multiple MCP servers, composing domain-specific capabilities as your application grows.