by Dr. Carola Lilienthal

Functional decomposition

This promise of salvation sounds good, but it involves many pitfalls, misunderstandings and challenges. A conversion to microservices is costly and, if introduced incorrectly, it can yield a result worse than the original architecture. Based on my experiences from customer projects of the past few years, I would like to separate the reasonable from the pointless and present pragmatic solutions.

Microservices: Why?

Microservices have emerged as a new architectural paradigm in recent years. Many developers and architects initially thought that microservices were only about splitting software systems into separately deployable services. But actually, microservices mean something completely different: they are about distributing software among development teams so that these teams can develop independently, autonomously and therefore faster than before. So first and foremost, microservices are not about technology, but about people.

A powerful development team should be large enough that vacation or illness does not lead to a standstill (three people or more) and small enough that communication remains manageable (no more than seven to eight people who need to keep each other up to date). Such a team should be given a piece of software, a module, for (further) development that is independent of the rest of the system. Only if the module is autonomous can the team make independent decisions and develop its module without having to wait for other teams and their deliveries.

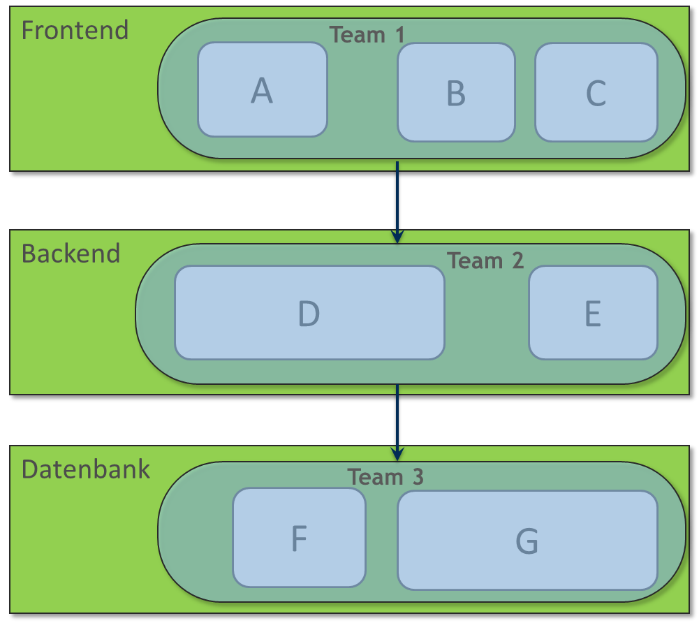

This independence from other teams is only possible if the software system is decomposed based on functional criteria. Using technical criteria as the basis leads to a situation where you have separate front-end and back-end teams (Fig. 1). In such a technical team allocation, at a minimum, the front-end team depends on the back-end team to extend the back-end interface with the features the front end requires. If you have a database team in addition, the back-end team has no freedom either and has to wait for adaptations made by the database team. In such a team structure, new features almost always involve several teams that depend on each other during implementation and need to communicate a lot to make sure the interfaces are correct.

Fig. 1: Technical allocation of teams

In contrast, an allocation of teams based on functional criteria makes it possible for a team to be responsible for a functional module in the software, which runs through all the technical layers from the interface to the database (Fig. 2).

Fig. 2: Functional classification of teams

If the functional modules are cut well, you should be able to assign each new feature to one team and its module. Of course, this is an idealistic vision. In practice, a new feature may force you to rethink the module partitioning because it torpedoes the current functional decomposition. Or the feature, which is most likely a bit too big, has to be skillfully decomposed into smaller features so that the different teams can implement their respective functional part independently of the other teams. The respective sub-features will then hopefully make sense on their own and can be delivered independently. However, the added value of the big feature will only be available to the user when all the teams involved have finished.

Functional decomposition: How does it work?

If you strive for a functional classification of your large monolith, the following question comes up: How do you find stable, independent functional partitions along which decomposition is possible? The relatively vague concept of microservices provides no answer here. That’s why domain-driven design (DDD) by Eric Evans has gained in importance in recent years. In addition to many other best practices, DDD provides guidance on how to classify a domain functionally into subdomains. Eric Evans then transfers this classification into subdomains to software systems. The equivalent of a subdomain in software is the bounded context. If the subdomains are selected well and the bounded contexts are implemented accordingly in the software, you will achieve a good functional decomposition.

To achieve a good functional decomposition, we have found it useful in our projects to first put aside the monolith and the structure that may exist in it, and once again take a fundamental look at the domain itself, that is, at the classification of the domain into subdomains. We usually start by working out an overview of our domain together with the users and subject matter experts. This can be done by means of either event storming or domain storytelling, two methods that are easy to understand for users and developers alike.

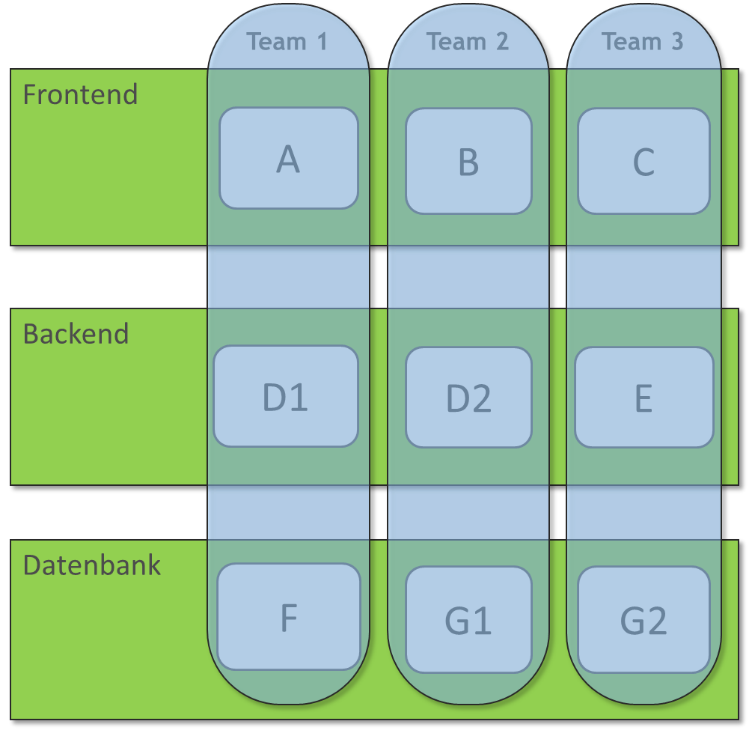

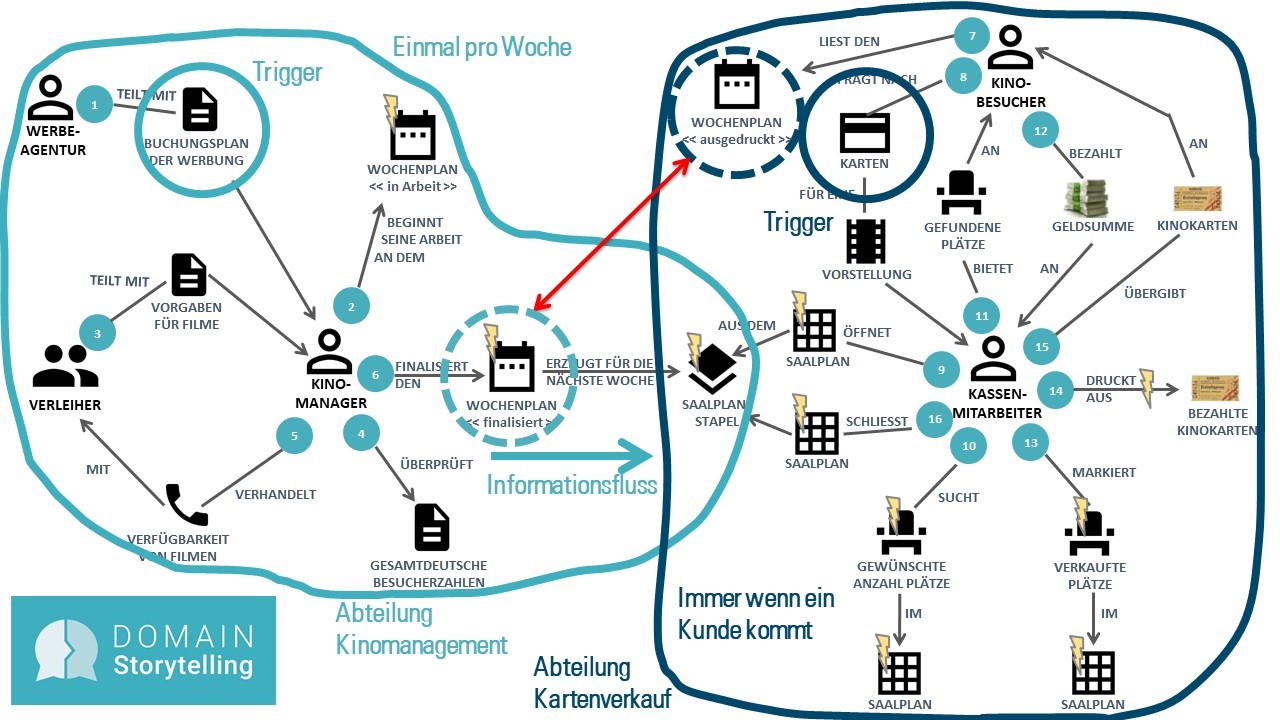

Figure 3 shows a domain story that was created with the users and developers of a small art house cinema. The fundamental question that we had asked ourselves during modeling was: who does what using what for what purpose? If you apply this question as a starting point, you can usually work out a common understanding of the domain very quickly.

Fig. 3: Overview domain story for an art house cinema

In this domain story, the advertising agency, the cinema manager, the renter, the cashier and the cinema audience can be identified as persons, roles or groups. The individual roles share documents and information, such as the advertising plan, film preferences and film availability. But they also work with “objects” from their domain, which are mapped in a software system: the weekly plan and the seating plan. These computer-supported objects are marked with a yellow flash in the domain story. The overview domain story starts at the top left with the number 1, where the advertising agency informs the cinema manager about the booking plan with the advertising, and ends at 16, when the cashier closes the seating plan.

This overview reveals several indicators that help you partition a domain:

Departmental boundaries or different groups of domain experts suggest that the domain story contains multiple subdomains. In our example, you could imagine a cinema management department and a ticket sales department (Fig. 4).

If key concepts of the domains are used or defined differently by the different roles, this indicates multiple subdomains. In our example, the “weekly plan” key concept is defined much more extensively by the cinema manager than the printed weekly plan which the cinema visitor gets to see. For the cinema manager, the weekly plan contains not only the shows in the individual rooms, but also the planned advertising, ice cream sales and the cleaning staff. This information is irrelevant to the cinema audience (dashed circles in Fig. 4).

If the overview domain story contains subprocesses that are started by different triggers and run in different rhythms, then these subprocesses could form their own subdomains (solid circles in Fig. 4).

If the overview contains process steps between which information flows in one direction only, this point could be a good place for a cut between two subdomains (light blue arrow in Fig. 4).

Fig. 4: Overview domain story with subdomain boundaries

In really large enterprise applications, the overview domain stories are usually much larger and involve more steps. Even in our small art house cinema, the ice cream sellers and the cleaning staff – who will certainly interact with the software – are missing in the overview. However, the indicators that you need to look for in your overview domain story apply to both small and larger domains.

Transfer to the monolith

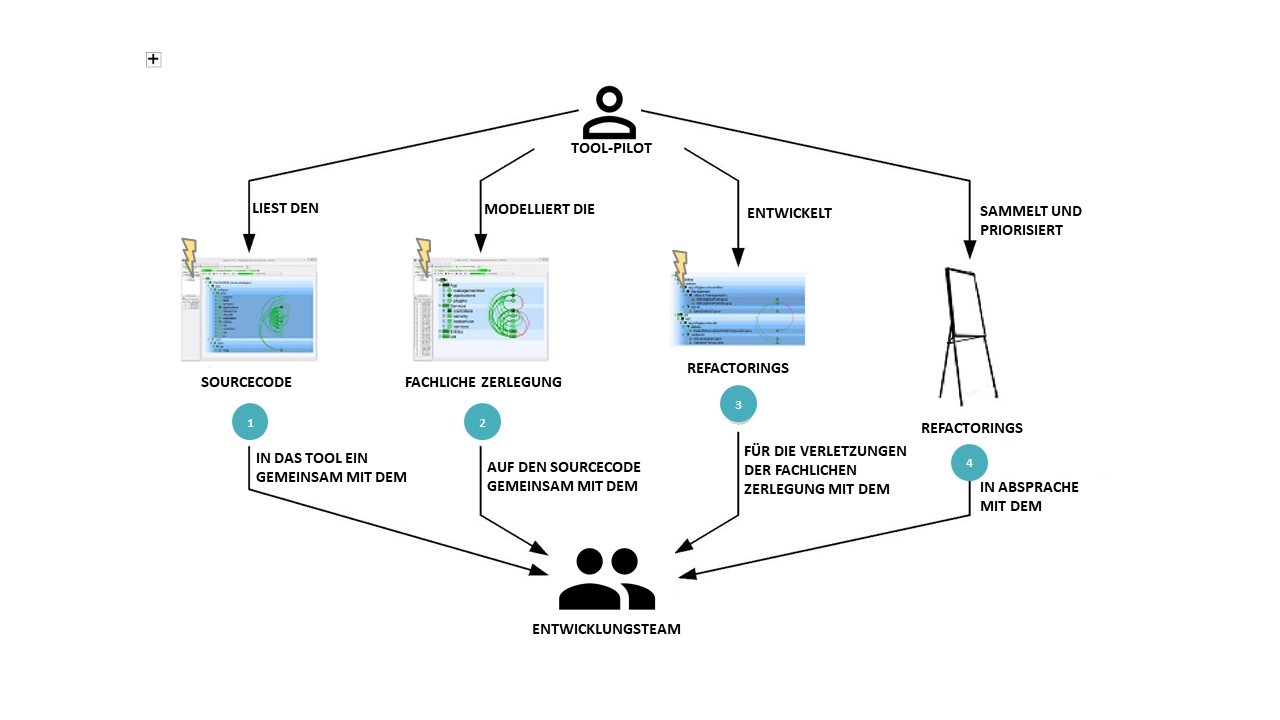

With the functional classification into subdomains in hand, we can now go back to the monolith and its structures. For this decomposition, we use architecture analysis tools that allow us to rebuild the architecture in the tool and define the refactorings that are necessary to actually restructure the source code. A variety of tools are suitable here: Sotograph, Sonargraph, Structure101, Lattix, Teamscale, Axivion Bauhaus Suite and others.

Fig. 5: Mob Architecting with the team

In Figure 5, you can see how the decomposition of the monolith is done using an analysis tool. The analysis is carried out during a workshop by a tool pilot who is familiar with the respective tool and the programming language(s) used, together with all the architects and developers of the system. At the beginning of the workshop, the source code of the system is parsed using the analysis tool (Fig. 5, 1), so that the existing structures are recorded (for example, build units, Eclipse/Visual Studio projects, Maven modules, package/namespace/directory trees, classes). The functional modules, which correspond to the functional decomposition developed with the subject matter experts, are then modeled on top of these existing structures (Fig. 5, 2). In the process, the whole team can see where the current structure is close to the functional decomposition and where it clearly deviates. The tool pilot and the development team then look for simple refactorings that align the existing structures with the functional decomposition (Fig. 5, 3). These refactorings are collected and prioritized (Fig. 5, 4). Sometimes the tool pilot and the development team determine during the discussion that the solution chosen in the source code is better or more advanced than the functional decomposition from the workshops with the users. Sometimes, however, neither the existing structure nor the desired functional decomposition turns out to be the best solution, and both must be fundamentally reconsidered.
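The comparison between the existing structure and the functional target model can be pictured with a small check. The following is only a minimal sketch of the idea and not how any particular analysis tool works internally: the package names, the mapping to functional modules and the dependency list are all invented for the cinema example.

```python
from collections import defaultdict

# Hypothetical mapping of existing packages to the functional modules
# agreed on in the workshop (all names are invented for illustration).
PACKAGE_TO_MODULE = {
    "cinema.planning": "cinema_management",
    "cinema.advertising": "cinema_management",
    "cinema.booking": "ticket_sales",
    "cinema.seating": "ticket_sales",
}

# Hypothetical package dependencies as a parser might report them:
# (from_package, to_package)
DEPENDENCIES = [
    ("cinema.planning", "cinema.advertising"),
    ("cinema.booking", "cinema.seating"),
    ("cinema.booking", "cinema.planning"),
    ("cinema.advertising", "cinema.seating"),
]


def cross_module_dependencies(dependencies, package_to_module):
    """Group the dependencies that cross a functional module boundary."""
    crossing = defaultdict(list)
    for source, target in dependencies:
        source_module = package_to_module[source]
        target_module = package_to_module[target]
        if source_module != target_module:
            crossing[(source_module, target_module)].append((source, target))
    return dict(crossing)


crossing = cross_module_dependencies(DEPENDENCIES, PACKAGE_TO_MODULE)
for (source_module, target_module), deps in sorted(crossing.items()):
    print(f"{source_module} -> {target_module}: {len(deps)} crossing dependencies")
```

Each crossing dependency is a candidate for discussion in the workshop: either it is refactored away, or it turns out to be a legitimate relationship and the target model is adjusted.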

First modular, then micro

Armed with the refactorings found in this manner, work on the monolith can begin. Finally, we can decompose it into microservices. But stop! At this point at the latest, we should ask ourselves whether we actually want to decompose our system into individually deployable units or whether a well-structured monolith isn’t already sufficient. By splitting the monolith into separate deployables, you buy yet another level of complexity, namely distribution. If you need distribution because the monolith is no longer powerful enough, then you need to take this step. Independent teams, however, can also be achieved in a well-structured monolith using today’s highly advanced build pipelines.

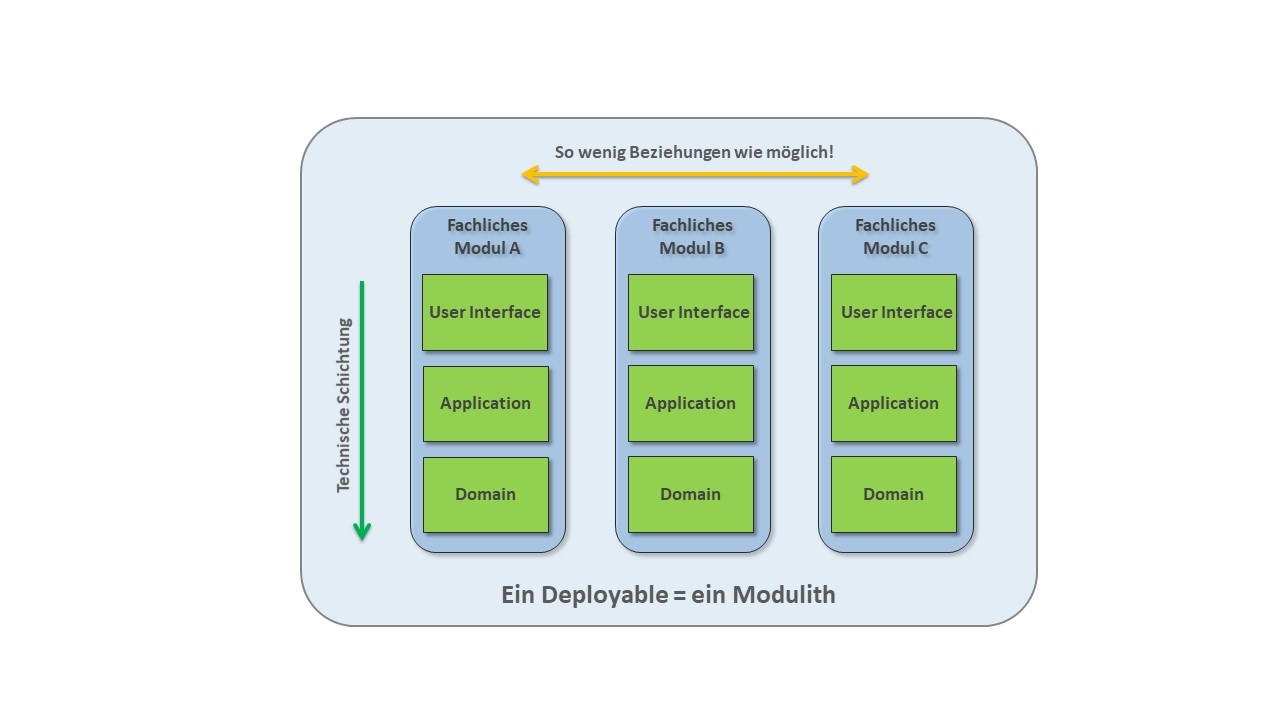

A well-structured monolith, that is, a modular monolith or (as Dr. Gernot Starke once said) “a modulith”, consists of individual functional modules that exist in a single deployable (Fig. 6). In some architectures, the user interfaces of the individual functional modules are highly integrated. Details on this variant are beyond the scope of this article and can be found in [1].

Fig. 6: The modulith, a well-structured monolith

By dividing it into modules that are as independent as possible, such a modulith implements a fundamental principle of good software architecture: high cohesion and loose coupling. The classes in each functional module belong to one subdomain and can be found as a bounded context in the software. In other words, these classes work closely together to complete a functional task. Therefore, there is a high degree of cohesion within a functional module. In order to do their functional job, classes in one module should not need classes from other functional modules.

At most, functional updates about changes that might be of interest to other modules should be exchanged, for example as events, while work orders that belong to another bounded context should be passed to the other module as commands. However, we should always make sure that these notifications do not proliferate and are not misused as disguised direct calls to other functional modules. Loose coupling between functional modules means that there are as few relationships as possible. It can never be achieved merely by using a technical event mechanism, because technical solutions create technical decoupling, but not functionally loose coupling.
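The distinction between events and commands can be sketched in a few lines. This is a minimal illustration under assumptions: the `MessageBus` class and the message types `WeeklyPlanPublished` and `RefundTickets` are hypothetical names invented for the cinema example, not part of any framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WeeklyPlanPublished:
    """Event: a fact that other modules may or may not care about."""
    week: int


@dataclass(frozen=True)
class RefundTickets:
    """Command: a work order that belongs to another bounded context."""
    show_id: str


class MessageBus:
    """Tiny in-process bus (hypothetical, for illustration only)."""

    def __init__(self):
        self._subscribers = {}       # event type -> list of handlers
        self._command_handlers = {}  # command type -> exactly one handler

    def subscribe(self, event_type, handler):
        self._subscribers.setdefault(event_type, []).append(handler)

    def register(self, command_type, handler):
        if command_type in self._command_handlers:
            raise ValueError(f"{command_type.__name__} already has a handler")
        self._command_handlers[command_type] = handler

    def publish(self, event):
        # An event may have zero, one or many interested modules.
        for handler in self._subscribers.get(type(event), []):
            handler(event)

    def send(self, command):
        # A command is addressed to exactly one responsible module.
        self._command_handlers[type(command)](command)


bus = MessageBus()
bookable_weeks = []   # state of the ticket sales module
refunded_shows = []   # state of the ticket sales module

bus.subscribe(WeeklyPlanPublished, lambda event: bookable_weeks.append(event.week))
bus.register(RefundTickets, lambda command: refunded_shows.append(command.show_id))

# The cinema management module publishes a fact and issues a work order.
bus.publish(WeeklyPlanPublished(week=42))
bus.send(RefundTickets(show_id="show-7"))
```

The bus itself only provides technical decoupling. Whether the modules are functionally loosely coupled still depends on how few such message types they need to exchange.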

Such a well-structured modulith is excellently prepared for a later decomposition into microservices, should it become necessary, because it already consists of functionally independent modules. So the advantages here are obvious: independent teams that can work quickly within the bounds of their bounded context, and an architecture that is prepared for decomposition into multiple deployables.

Sticking point: the domain model

When I look at large monoliths, I usually find a canonical domain model there. This domain model is used by all parts of the software, and the classes in the domain model have a great many methods and attributes compared to the rest of the system. The central domain classes, such as product, contract, customer, etc., are then usually the largest classes in the system. What happened?

Each developer who built new functionality into the system used the central classes of the domain model. However, these classes needed to be extended a bit so that the new functionality could be implemented. That way, with each new functionality, one or two new methods and attributes were added to the central classes. That’s it right there! That’s how it happens! If I already have a class called product in the system and I develop functionality that needs the product, then I use the product class in the system and expand it to make it fit. This is because I want to reuse the existing class so that I only have to look in one place if something is wrong with the product. It’s a pity, though, that these new methods are not needed in the rest of the system, but were added for the new functionality only.

Domain-driven design and microservices head in the opposite direction at this point. In a modulith that is functionally decomposed or in a distributed microservices architecture, each bounded context that uses the product has its own internal product class. This context-specific product class is tailored to its bounded context and offers only those methods that are needed in this context. In our cinema example from Figures 3 and 4, the weekly plan appears twice in the functionally decomposed system. First, we have it in the cinema management bounded context with a comprehensive interface, through which you can schedule advertising for shows and budget the ice cream sales and the cleaning staff. Secondly, we see it in the ticket sales bounded context, where the interface only allows you to search for films and query the film offer at specific times. Advertising, ice cream sales and the cleaning staff are irrelevant to ticket sales and are therefore not needed in the weekly plan class in this bounded context.
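The two weekly plans could look roughly like this. The sketch is illustrative only: class names, method names and the sample films are assumptions, and in a real code base the two classes would live in separate packages owned by different teams.

```python
from dataclasses import dataclass
from datetime import datetime


# --- cinema management bounded context (hypothetical package) ---------
class ManagementWeeklyPlan:
    """Weekly plan with the comprehensive interface the cinema manager needs."""

    def __init__(self):
        self.shows = []            # (film, starts_at, room)
        self.advertising = {}      # (film, starts_at) -> list of spots
        self.ice_cream_budget = 0.0
        self.cleaning_staff = []

    def schedule_show(self, film, starts_at, room):
        self.shows.append((film, starts_at, room))

    def schedule_advertising(self, film, starts_at, spot):
        self.advertising.setdefault((film, starts_at), []).append(spot)

    def budget_ice_cream_sales(self, amount):
        self.ice_cream_budget = amount

    def assign_cleaning_staff(self, name):
        self.cleaning_staff.append(name)


# --- ticket sales bounded context (hypothetical package) --------------
@dataclass(frozen=True)
class Screening:
    film: str
    starts_at: datetime


class TicketSalesWeeklyPlan:
    """Weekly plan reduced to what ticket sales needs: searching and querying."""

    def __init__(self, screenings):
        self._screenings = list(screenings)

    def find_film(self, title):
        return [s for s in self._screenings if s.film == title]

    def films_at(self, starts_at):
        return sorted({s.film for s in self._screenings if s.starts_at == starts_at})


friday = datetime(2024, 5, 3, 20, 0)
saturday = datetime(2024, 5, 4, 20, 0)

management_plan = ManagementWeeklyPlan()
management_plan.schedule_show("Metropolis", friday, "Room 1")
management_plan.schedule_advertising("Metropolis", friday, "local bakery spot")

sales_plan = TicketSalesWeeklyPlan(
    [Screening("Metropolis", friday), Screening("Nosferatu", saturday)]
)
print(sales_plan.films_at(friday))
```

Both classes model "the weekly plan", but neither team has to touch the other's class when its own requirements change.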

If you want to functionally decompose a monolith, you have to break up the canonical domain model. This is a Herculean task in most large monoliths, because the parts of the system that build on the domain model are tightly interwoven with its classes. To make progress here, we first copy the domain classes into each bounded context. So, we duplicate code and then trim each copy down to what its bounded context actually needs. This gives us context-specific domain classes that can be extended and customized by the respective teams, independently of the rest of the system.

Of course, certain properties of a product, such as the product ID and product name, must remain the same in all bounded contexts. In addition, when a new product is created in one bounded context, it must be announced in all other bounded contexts. This information is reported via updates from that bounded context to all other bounded contexts that work with products.
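A minimal sketch of such an update, assuming two hypothetical bounded contexts (`SalesContext` and `WarehouseContext`) that are not taken from the article: each keeps its own product class, and only the shared ID and name travel in the update.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductCreated:
    """Update sent to every bounded context that works with products."""
    product_id: str
    name: str


@dataclass
class SalesProduct:
    """Product as the (hypothetical) sales context sees it."""
    product_id: str
    name: str
    list_price: float = 0.0


@dataclass
class WarehouseProduct:
    """Product as the (hypothetical) warehouse context sees it."""
    product_id: str
    name: str
    stock: int = 0


class SalesContext:
    def __init__(self):
        self.products = {}

    def on_product_created(self, update):
        self.products[update.product_id] = SalesProduct(update.product_id, update.name)


class WarehouseContext:
    def __init__(self):
        self.products = {}

    def on_product_created(self, update):
        self.products[update.product_id] = WarehouseProduct(update.product_id, update.name)


def announce(update, contexts):
    """Report a new product to all contexts that work with products."""
    for context in contexts:
        context.on_product_created(update)


sales = SalesContext()
warehouse = WarehouseContext()
announce(ProductCreated(product_id="P-1", name="Popcorn"), [sales, warehouse])

# Each context can now evolve its own attributes independently.
sales.products["P-1"].list_price = 4.5
warehouse.products["P-1"].stock = 120
```

Only the ID and the name are kept in sync; price and stock remain private to their respective contexts.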

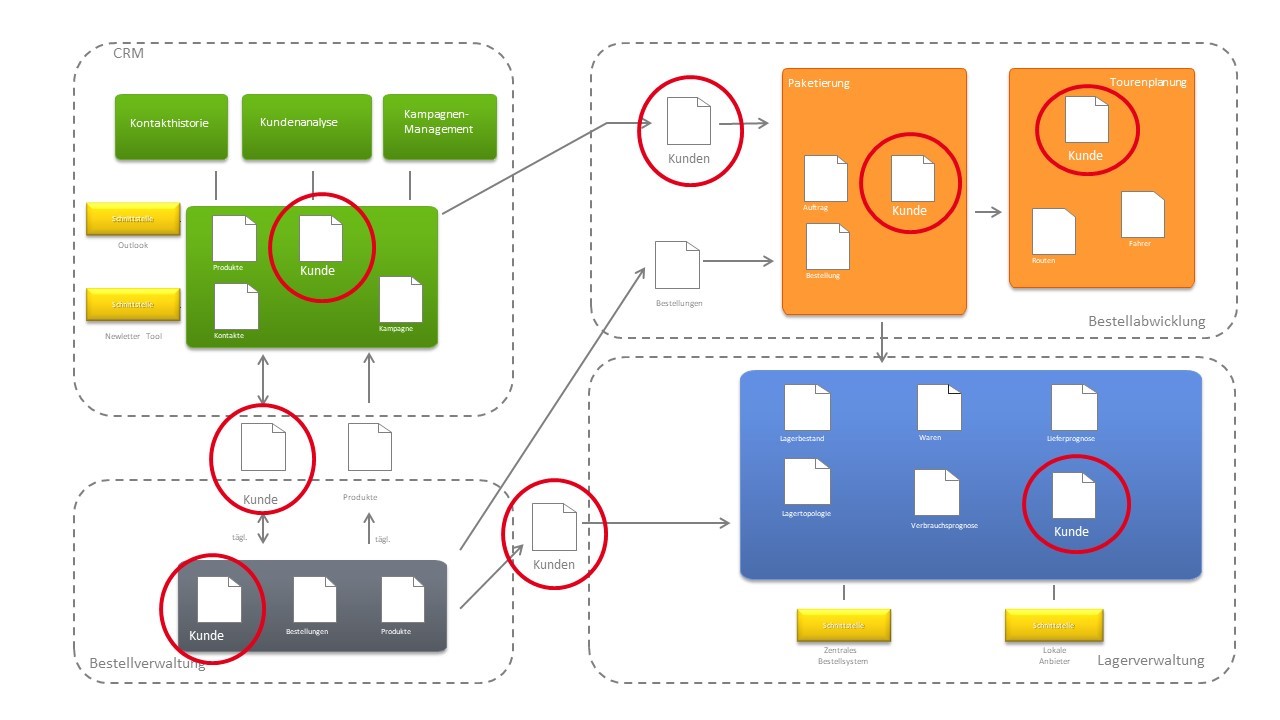

SOA is not a microservices architecture

When a system is decomposed in this manner, a structure is created that is surprisingly similar to an IT landscape from the early 2000s. Different systems (possibly from different manufacturers) are connected to each other via interfaces, but continue to operate independently on their own domain model. In Figure 7, you can see that there are customers in each of the four systems shown, and that customer data is exchanged through interfaces between the systems.

Fig. 7: IT landscape with the customer in all systems

At the beginning of the 2000s, we wanted to counteract this distribution of customer data by following service-oriented architectures (SOA) as an architectural style. This resulted in IT landscapes in which there was a central customer service that all other systems use via a service bus. Figure 8 presents a diagram of such a SOA with customer service.

In the light of the discussion about microservices, such a service-oriented architecture using centralized services has the crucial disadvantage that all development teams that need the central customer service can no longer work independently and thus lose their individual clout. At the same time, it is presumptuous to expect or demand that all mainframe systems, which ensure centralized data storage for many companies and can be addressed as SOA services, be converted to microservices architectures. In such cases, I often encounter a mix of SOA and microservices architecture, which can work quite well.

Fig. 8: IT landscape with SOA and customer service

Location determination

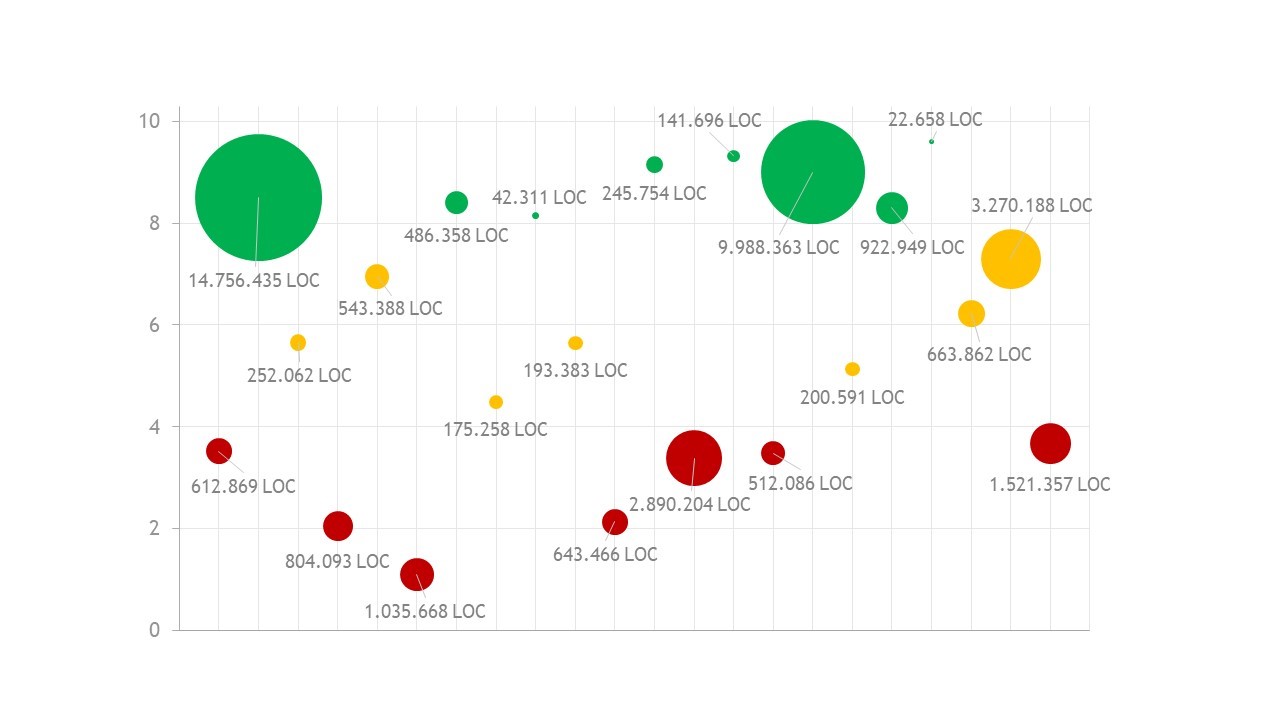

To assess how well the source code of a monolith is prepared for functional decomposition, we have developed the Modularity Maturity Index (MMI) in recent years. In Figure 9, we see a selection of 21 software systems analyzed over a five-year period (X-axis). For each system, the size in lines of code is represented by the size of the point, while modularity is shown on a scale of 0 to 10 (Y-axis).

Fig. 9: Modularity Maturity Index (MMI)

If a system is rated between 8 and 10, it is already modular in design and can be decomposed with little effort. Systems rated between 4 and 8 have good approaches, but some refactoring is needed to improve modularity. Systems with a grade below 4 would be referred to as a Big Ball of Mud in domain-driven design. In such cases, you can hardly detect any functional structure, and everything is connected with everything. Such systems could be decomposed into functional modules only with a great deal of effort.
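Expressed as code, the three bands look like this. The sketch only encodes the thresholds named above and does not compute the MMI itself. How the boundary values are assigned is an assumption: a score of exactly 8 is counted as modular and a score of exactly 4 as needing refactoring, and the function and label names are invented.

```python
def classify_mmi(score):
    """Map an MMI score (0 to 10) to one of the three bands from the text.

    Assumption: boundary values belong to the higher band.
    """
    if not 0 <= score <= 10:
        raise ValueError("MMI score must be between 0 and 10")
    if score >= 8:
        return "modular"            # can be decomposed with little effort
    if score >= 4:
        return "needs_refactoring"  # good approaches, some refactoring needed
    return "big_ball_of_mud"        # decomposition only with great effort


for score in (9.1, 5.5, 2.0):
    print(score, classify_mmi(score))
```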

Conclusion

As an architectural style, microservices are still the talk of the town. In the meantime, though, a number of organizations have gained experience with this type of architecture, and the challenges and mistakes have become clear.

To decompose a monolith in your own company, you first have to split the domain into subdomains and subsequently transfer this structure to the source code. In particular, the canonical domain model, our love for reuse and the pursuit of service-oriented architectures are obstacles that must be overcome.